4.3. Examples

4.3.1. Install 3D Assets

The 3D visualization of voraus.operator can be easily extended with additional 3D assets. The goal of this example is to configure the scene from Fig. 25.

Fig. 25 3D Scene in voraus.operator

In general, a scene can contain multiple assets in GLB format (.glb) and has the following structure.

<ENVIRONMENT_NAME>/
├── <assetPackage1>/
│   ├── <assetPackage1>.env.json
│   └── <assetPackage1>.glb
├── <assetPackage2>/
│   ├── <assetPackage2>.env.json
│   ├── <assetPackage2>.glb
│   └── <assetPackage2>.glb
└── ...

Each asset package is configured using a JSON configuration file (.json), which allows you to define transformations and dependencies between individual objects.

Getting Started

Create Bind Mount

A configured scene must be placed under /srv/voraus/voraus-hmi/assets/environments inside the Docker container. To make a scene from the file system of the host machine available there, a bind mount is used. It is added under volumes in the stack configuration (described in Access the voraus.core software stack). The source entry is the path of the host folder in which the scene is subsequently configured.

volumes:
  - type: bind
    source: /home/workspace/environments
    target: /srv/voraus/voraus-hmi/assets/environments

Note

When using WSL, the Windows file system can be accessed via /mnt/c/Users/<user name>.

For further information, see also Bind mounts.

Download Assets

In order to reproduce the example, the GLBs that are used can be downloaded from the following links:

Implementation Steps

Create folder structure

Inside the source folder defined in the stack configuration file, a folder is created for the scene, which will later contain all assets. For the example, it is named PICK_AND_PLACE_ENVIRONMENT. According to the following structure, additional subfolders for the asset packages are created.

PICK_AND_PLACE_ENVIRONMENT/
├── base/
│   ├── base.env.json
│   └── base.glb
└── table_with_boxes/
    ├── table_with_boxes.env.json
    └── table_with_boxes.glb
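The folder layout above can also be created with a short script. The following is a minimal sketch; the function name scaffold_scene is hypothetical and not part of any voraus tooling. It only creates the folders. The .env.json and .glb files still have to be added by hand.

```python
from pathlib import Path
from typing import List


def scaffold_scene(source_dir: Path, scene_name: str, packages: List[str]) -> List[Path]:
    """Create <source_dir>/<scene_name>/<package>/ for every asset package."""
    created = []
    for package in packages:
        package_dir = source_dir / scene_name / package
        # parents=True also creates the scene folder, exist_ok=True allows re-runs
        package_dir.mkdir(parents=True, exist_ok=True)
        created.append(package_dir)
    return created
```

For this example, the call would be scaffold_scene(Path("/home/workspace/environments"), "PICK_AND_PLACE_ENVIRONMENT", ["base", "table_with_boxes"]), assuming the source path from the bind mount shown earlier.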

Configuration

The folder names of each asset package are used as identifiers, and thus determine the name of the subordinate configuration files.

The robot base configuration base.env.json is defined as follows:

{
  "version": "1.1",
  "offset": {
    "x": 0,
    "y": 0,
    "z": -0.54,
    "rx": 0,
    "ry": 0,
    "rz": 0
  },
  "id": "base",
  "parentID": "origin",
  "assets": [
    {
      "asset": "base.glb",
      "objectName": "cube_base",
      "transformToZUp": true
    }
  ]
}

The following parameters can be set:

version: Format version of the configuration file

offset: Transformation of the asset package (x, y, z, rx, ry, rz)

id (optional): Identifier that other asset packages can use as parentID to be positioned relative to this one

parentID: Identifier of the parent object. With origin, the asset package is positioned relative to the Robot CS

assets: List of assets. Each entry has the following parameters:

  asset: Relative path to the GLB file

  objectName: Name of the object within the GLB file

  transformToZUp (optional): Converts objects that use the Y-up convention to Z-up

  offset (optional): Transformation of the individual asset relative to the asset package
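A quick structural check of a configuration file can catch missing parameters before the scene is loaded. The sketch below treats every parameter that is not marked as optional in the list above as required. That reading is an assumption, and the function name find_config_problems is hypothetical.

```python
import json
from typing import Any, Dict, List

# Assumption: parameters not marked "(optional)" above are mandatory
REQUIRED_TOP_LEVEL = ("version", "offset", "parentID", "assets")
REQUIRED_PER_ASSET = ("asset", "objectName")


def find_config_problems(config: Dict[str, Any]) -> List[str]:
    """Return a list of human-readable problems, empty if none were found."""
    problems = [
        f"missing top-level key '{key}'"
        for key in REQUIRED_TOP_LEVEL
        if key not in config
    ]
    for index, entry in enumerate(config.get("assets", [])):
        for key in REQUIRED_PER_ASSET:
            if key not in entry:
                problems.append(f"assets[{index}]: missing key '{key}'")
    return problems


base_config = json.loads("""
{
  "version": "1.1",
  "offset": {"x": 0, "y": 0, "z": -0.54, "rx": 0, "ry": 0, "rz": 0},
  "id": "base",
  "parentID": "origin",
  "assets": [
    {"asset": "base.glb", "objectName": "cube_base", "transformToZUp": true}
  ]
}
""")
print(find_config_problems(base_config))  # prints []
```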

Note

Each asset package may contain any number of GLB files which can be configured using the JSON configuration file.

The GLB file table_with_boxes.glb contains both the table and the two boxes. Each of these objects is integrated by its object name using the configuration file. Individual assets can be transformed relative to the asset package, as shown for cube_box_green, which is shifted by -0.05 along the y-axis.

{
  "version": "1.1",
  "offset": {
    "x": 0.3,
    "y": 0.25,
    "z": -0.54,
    "rx": 0,
    "ry": 0,
    "rz": 0
  },
  "id": "table",
  "parentID": "origin",
  "assets": [
    {
      "asset": "table_with_boxes.glb",
      "objectName": "cube_table"
    },
    {
      "asset": "table_with_boxes.glb",
      "objectName": "cube_box_red"
    },
    {
      "asset": "table_with_boxes.glb",
      "objectName": "cube_box_green",
      "offset": { "y": -0.05 }
    }
  ]
}

Troubleshooting

If the scene is not displayed as desired, check whether the following applies:

The naming convention for the folder names of asset packages and configuration files is followed.

The relative path from the configuration file to the GLB file is correct.

The defined object name of an asset exists in the referenced GLB file.

The objects within the GLB file do not have an unexpected transformation.
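To check the third point, the object names stored in a GLB file can be listed without a 3D tool. The sketch below reads the JSON chunk of the binary glTF container and returns the node names. It assumes that objectName in the configuration refers to a glTF node name, which is not confirmed by this documentation. The function name glb_node_names is hypothetical.

```python
import json
import struct
from typing import List

GLB_MAGIC = b"glTF"        # first four bytes of every GLB file
JSON_CHUNK_TYPE = b"JSON"  # type tag of the first chunk


def glb_node_names(data: bytes) -> List[str]:
    """Return the names of all named nodes in the JSON chunk of a GLB file."""
    # Layout: 12-byte header (magic, version, total length),
    # then the first chunk: 4-byte length, 4-byte type, payload.
    if len(data) < 20 or data[:4] != GLB_MAGIC:
        raise ValueError("not a GLB file")
    (chunk_length,) = struct.unpack_from("<I", data, 12)
    if data[16:20] != JSON_CHUNK_TYPE:
        raise ValueError("first chunk is not a JSON chunk")
    gltf = json.loads(data[20:20 + chunk_length].decode("utf-8"))
    return [node["name"] for node in gltf.get("nodes", []) if "name" in node]
```

A possible use is to compare the result of glb_node_names(Path("table_with_boxes/table_with_boxes.glb").read_bytes()) with the objectName entries in table_with_boxes.env.json.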

4.3.2. Tutorial: How to integrate a SensoPart camera with Python

Introduction

This tutorial will demonstrate how a SensoPart V20 camera manufactured by SensoPart Industriesensorik GmbH can be integrated using Python. The SensoPart cameras communicate using the TCP/IP protocol. Once a job is configured on the camera, it can be triggered via TCP/IP.

To configure a SensoPart camera, additional software is necessary. Please refer to the Useful links section for the software and its documentation.

Getting Started

Useful links

Requirements

Download the VISOR software.

Connect the SensoPart camera to your computer and open the VISOR software to configure the camera according to the documentation.

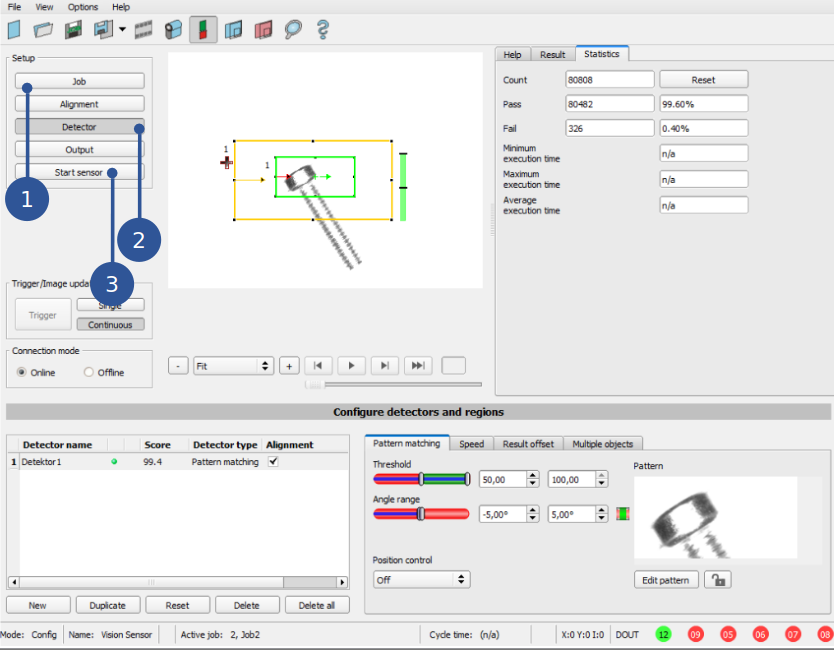

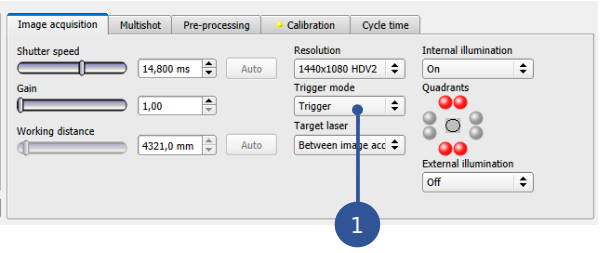

Configure the job (Fig. 26/➊) of the camera using your preferred detector (Fig. 26/➋) according to the documentation.

Set the trigger mode to “Trigger” as shown in Fig. 27/➊.

Fig. 26 SensoPart configuration overview

Fig. 27 SensoPart job configuration panel

Implementation Steps

Configuring the data output

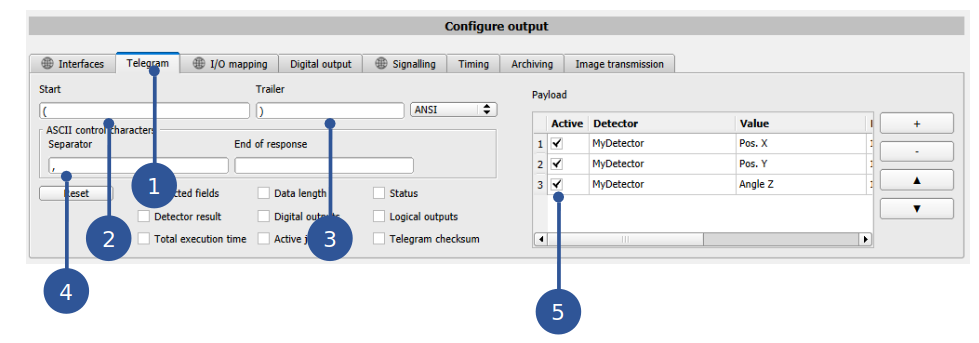

Once the job is properly configured, open the Telegram tab (Fig. 28/➊). In this tab, the data output can be configured.

Set the Start identifier (Fig. 28/➋) to “(” and the Trailer identifier (Fig. 28/➌) to “)”.

Set the Separator (Fig. 28/➍) to “,”.

In the payload on the right side, tick the boxes for the Pos. X, Pos. Y and Angle Z (Fig. 28/➎).

Please refer to the VISOR Software documentation for further information on how to configure the data output.

If everything is properly configured (see Fig. 28), click on Start sensor (Fig. 26/➌) to upload the job to the connected camera. Afterwards the camera can be disconnected from your computer and be moved somewhere else.

Fig. 28 SensoPart output configuration panel

Writing a Python TCP/IP client for the camera

The code section below (Basic SensoPart Python class.) provides a client implementation to communicate with a SensoPart camera via TCP/IP. The main functionalities include establishing a connection, triggering a job, and retrieving the results.

 1 import socket
 2 from typing import Tuple
 3
 4
 5 class V20:
 6     def __init__(self, device_host: str, device_port: int = 6001, timeout: int = 10):
 7         """
 8         Initialize the client for the SensoPart TCP socket.
 9
10         Args:
11             device_host (str): IP address or host name of the camera.
12         """
13         self.device_host = device_host
14         self.device_port = device_port
15         self.timeout = timeout
16         self.is_connected = False
17         self._attempt_connection_if_necessary()
18
19     def _connect(self) -> None:
20         # Connect the socket created in _attempt_connection_if_necessary()
21         try:
22             self.client_socket.connect((self.device_host, self.device_port))
23             self.is_connected = True
24             print(
25                 f"Connection to the TCP socket {self.device_host}:{self.device_port} was successful!"
26             )
27         except socket.error as exc:
28             print(f"Failed to establish connection with the SensoPart camera: {exc}")
29
30     def disconnect(self):
31         self.client_socket.close()
32         self.is_connected = False  # allow a later reconnect
33     def _attempt_connection_if_necessary(self):
34         if not self.is_connected:
35             # Socket needs to be reopened, cannot attempt connect twice
36             self.client_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
37             self.client_socket.settimeout(self.timeout)
38             self._connect()
39
40     def trigger_job(self):
41         self._attempt_connection_if_necessary()
42         try:
43             # Send the "trigger" command
44             trigger_command = "TRG"
45             self.client_socket.sendall(trigger_command.encode("utf-8"))
46
47         except socket.error as exc:
48             print("Error in trigger_job(): ", exc)
49
50     def read_results(self) -> Tuple[float, float, float]:
51         self._attempt_connection_if_necessary()
52         try:
53             response = ""
54             while ")" not in response:
55                 # Receive one character at a time until the trailer ")" arrives
56                 chunk = self.client_socket.recv(1).decode("ascii")
57                 if not chunk:
58                     raise ConnectionError("Camera closed the connection")
59                 response += chunk
60
61             # A response of all zeros means that no object was detected
62             # Extract values from the response string
63             object_pos = response.strip("()").split(",")
64
65             # Convert values to floats and scale them to millimeters and degrees
66             x_pos, y_pos, z_rot = (float(v) / 1000 for v in object_pos)
67
68             # Print values with units
69             print(f"x_pos: {x_pos:.3f} mm, y_pos: {y_pos:.3f} mm, z_rot: {z_rot:.3f}°")
70
71             return x_pos, y_pos, z_rot
72
73         except socket.error as exc:
74             print("Error in read_results(): ", exc)
75             raise

Line 5: This class contains the TCP/IP client for the SensoPart camera.

Line 6: The __init__ constructor initializes the client with the provided device host, port, and timeout settings. The initialization is executed automatically when an instance of the class is created.

Line 19: This private method establishes a connection to the SensoPart camera.

Line 30: This method closes the connection to the SensoPart camera. This is especially important because most cameras can be accessed by only one client at a time. If the connection is not closed properly, the camera might need to be restarted before a connection can be reestablished.

Line 33: This method checks if a connection is established and attempts to connect if not. Useful to avoid redundant connection attempts.

Line 40: This method triggers a job on the SensoPart camera.

Line 50: This method reads the results of a job triggered on the SensoPart camera. The response format was configured earlier in Configuring the data output. The method receives data until it encounters a closing parenthesis “)”. It then parses the received data, extracts the object’s position values, converts them to floating-point values in millimeters and degrees, and prints them with units. Upon successful execution, it returns a tuple containing the object’s x and y position and z rotation.

If an error occurs while connecting or while triggering a job, an error message is printed. If an error occurs while reading the results, the error message is printed and the exception is re-raised.
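The parsing step can be tried without a camera. The following is a minimal sketch of a stand-alone parser for the telegram format configured in Configuring the data output (start identifier “(”, separator “,”, trailer “)”). The function name parse_telegram and the sample telegram are made up for illustration. The scale factor of 1000 mirrors the division used in the class.

```python
from typing import Tuple


def parse_telegram(telegram: str, scale: float = 1000.0) -> Tuple[float, float, float]:
    """Parse '(x,y,angle)' into floats, dividing each raw value by scale."""
    text = telegram.strip()
    if not (text.startswith("(") and text.endswith(")")):
        raise ValueError(f"unexpected telegram framing: {telegram!r}")
    fields = text[1:-1].split(",")
    if len(fields) != 3:
        raise ValueError(f"expected 3 fields, got {len(fields)}: {telegram!r}")
    x_pos, y_pos, z_rot = (float(field) / scale for field in fields)
    return x_pos, y_pos, z_rot


# Hypothetical telegram, not captured from a real camera
print(parse_telegram("(12500,-3250,90000)"))  # prints (12.5, -3.25, 90.0)
```

Compared with stripping parentheses and splitting directly, the explicit checks turn a misconfigured telegram into a clear error instead of a failed float conversion.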

The following code section gives an example on how to use the V20 class during runtime:

# Establish connection
client = V20(device_host="192.168.100.100", device_port=2006)

# Trigger the job and read the detection result
client.trigger_job()
x_pos, y_pos, z_rot = client.read_results()
print(f"Pose of detected object: x:{x_pos}mm, y:{y_pos}mm, z:{z_rot}°")

# Close the connection so that other clients can access the camera
client.disconnect()
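When no camera is at hand, the client can be exercised against a local stand-in. The sketch below starts a one-shot TCP server on localhost that answers the “TRG” command with a fixed telegram on the same connection. It is an assumption-laden test double: the function name start_fake_camera and the reply are made up, and the behavior of a real camera (for example acknowledgements or separate request and response ports) is not modeled.

```python
import socket
import threading
from typing import Tuple


def start_fake_camera(
    reply: bytes = b"(12500,-3250,90000)",
) -> Tuple[str, int, threading.Thread]:
    """Start a one-shot TCP server on localhost that answers 'TRG' with reply."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    server.listen(1)
    host, port = server.getsockname()

    def serve_once() -> None:
        connection, _ = server.accept()
        with connection:
            # Collect exactly three bytes, recv() may deliver fewer at once
            received = b""
            while len(received) < 3:
                chunk = connection.recv(3 - len(received))
                if not chunk:
                    break
                received += chunk
            if received == b"TRG":
                connection.sendall(reply)
        server.close()

    thread = threading.Thread(target=serve_once, daemon=True)
    thread.start()
    return host, port, thread
```

With the returned host and port, a client such as V20(device_host=host, device_port=port) can call trigger_job() and read_results() once before the stand-in shuts down.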

Troubleshooting

| Error Message | Comment |
|---|---|
| Failed to establish connection with the SensoPart camera: timed out | Check the camera's network settings in the VISOR software and the IP address and port used. |
| Error in trigger_job()/read_results(): | Check whether the connection was interrupted. |
| The program is stuck in the read_results() method | Check if the data output was configured correctly in the VISOR software. Did you miss a closing “)”? |
| The results are all zeros | No object was detected. Try a different illumination of the scene and make sure that the job is configured correctly in the VISOR software. |

4.3.3. Tutorial: How to integrate a SensoPart camera with the voraus.operator

Introduction

In Tutorial: How to integrate a SensoPart camera with Python you learned how to communicate with a SensoPart V20 camera manufactured by SensoPart Industriesensorik GmbH using Python. This tutorial will demonstrate how the previously written Python code can be integrated directly into a new program with the voraus.operator.

Getting Started

Note

The SensoPart camera used for this tutorial was configured with the IP address 192.168.100.200. The port 2006 was used for the request channel and the port 2005 for the response channel. This might be different for your setup, depending on your camera network settings. Just replace these values in the tutorial according to your needs. It is also possible to configure the SensoPart camera in a way that one port is used for both channels. Please refer to the documentation mentioned in Tutorial: How to integrate a SensoPart camera with Python.

Requirements

A running voraus.core with the voraus.operator. Follow the installation instructions of your voraus.core version in voraus.core.

You followed the Tutorial: How to integrate a SensoPart camera with Python. In particular, the SensoPart camera has already been configured successfully with the VISOR software.

You already know the basics of how to use the voraus.operator, e.g. how to create a new program and how to add commands.

Implementation Steps

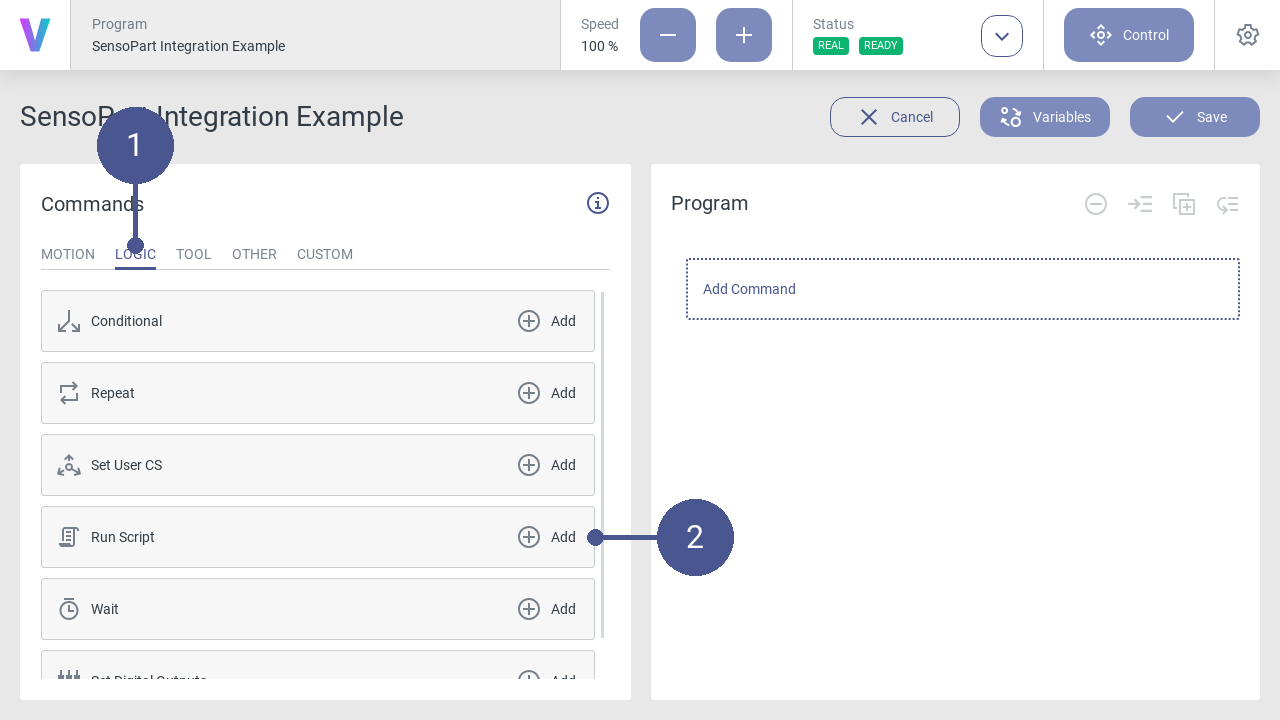

Create and load a new program in the voraus.operator called “SensoPart Integration Example”.

- For the first command, add a Python code block containing the base class for the camera communication.

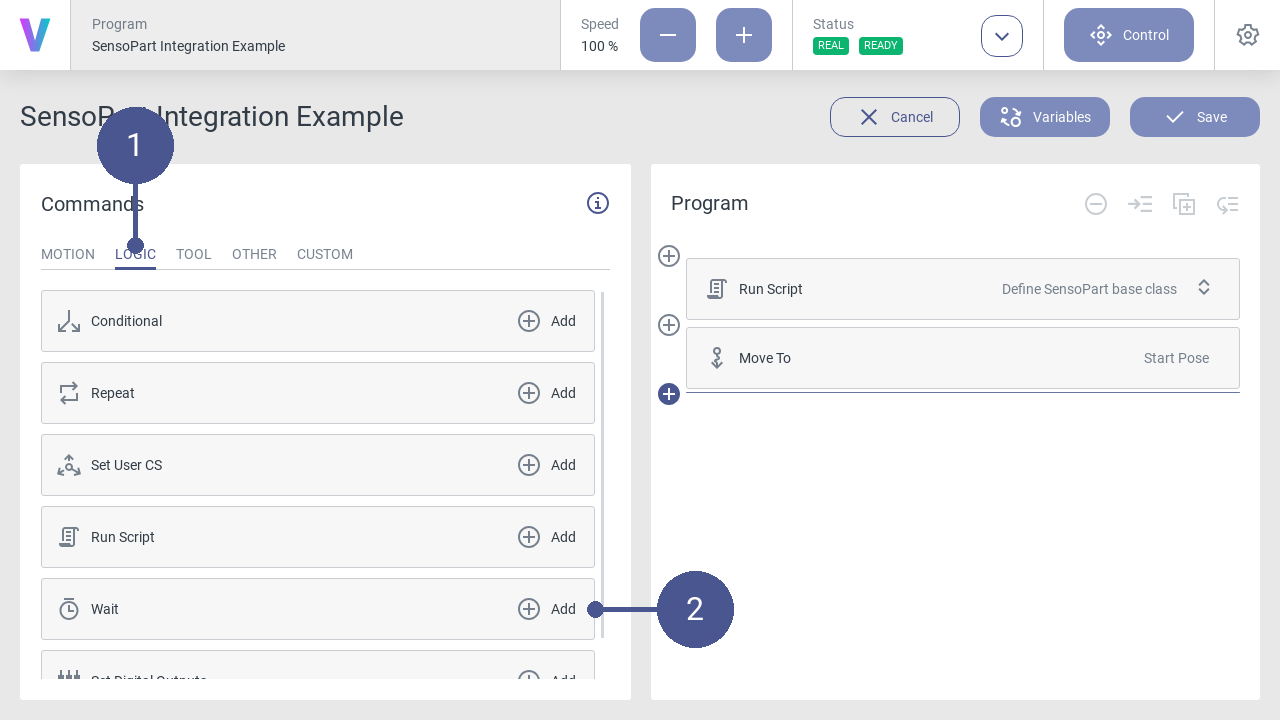

Navigate to the LOGIC tab (Fig. 29/➊) and select the Run Script command (Fig. 29/➋)

Fig. 29 Adding a Python code block to the program

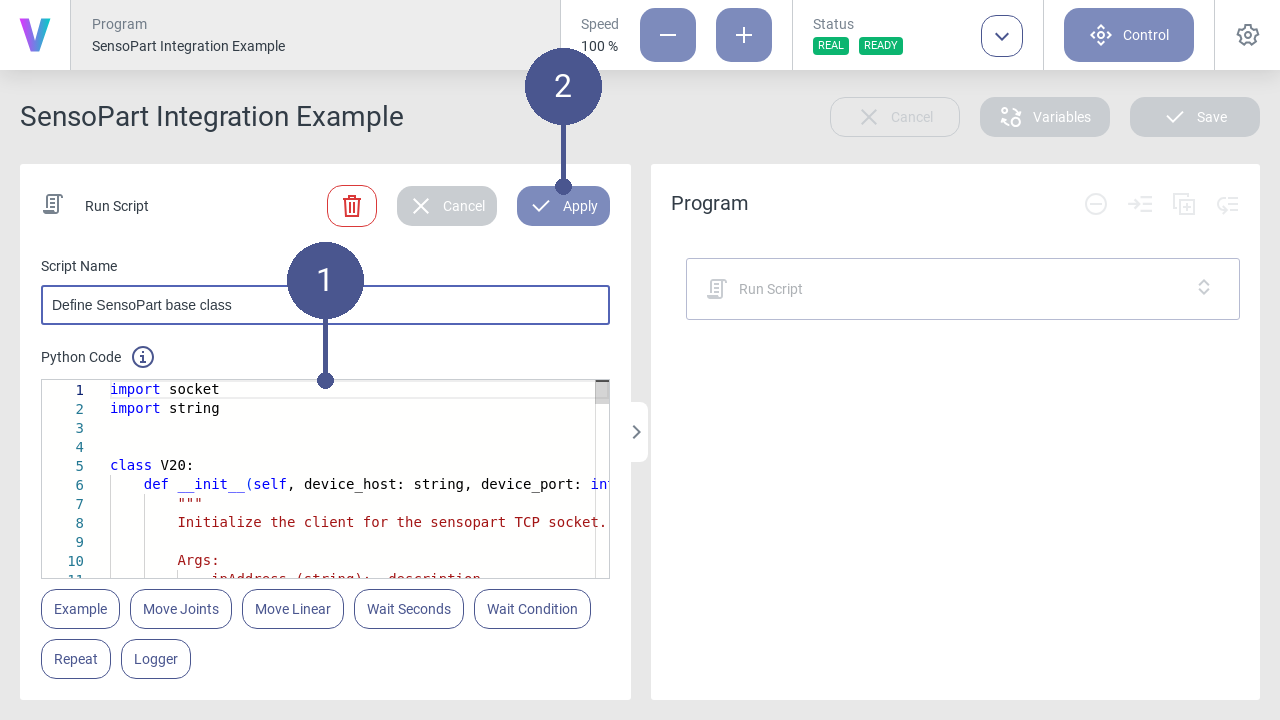

Copy and paste the content of the Python base class described in Tutorial: How to integrate a SensoPart camera with Python into the text field (Fig. 30/➊). Afterwards, click on Apply (Fig. 30/➋).

Fig. 30 Defining the base class

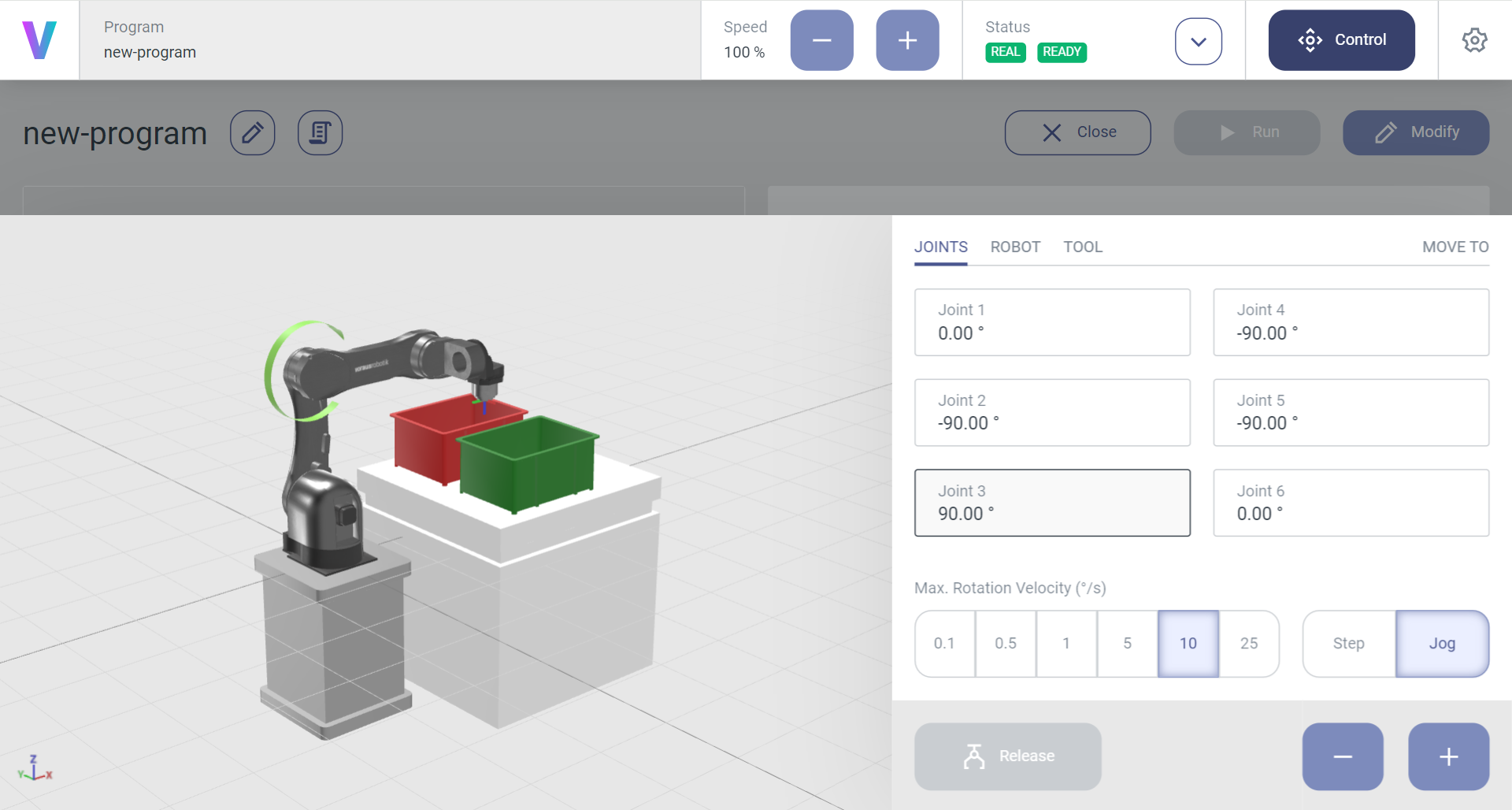

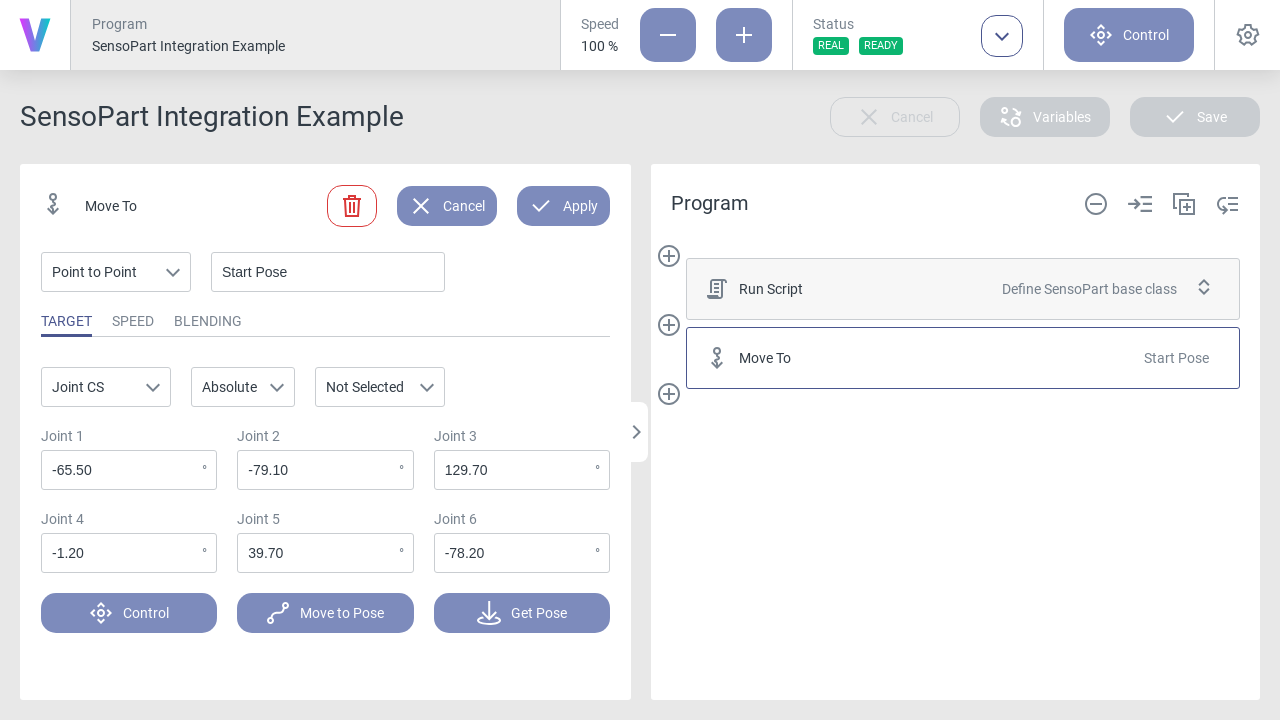

Next, add a Move To motion command to the starting pose of the program. The robot should be moved in such a way that the view of the camera is not obscured (Fig. 31).

Fig. 31 Adding a starting pose

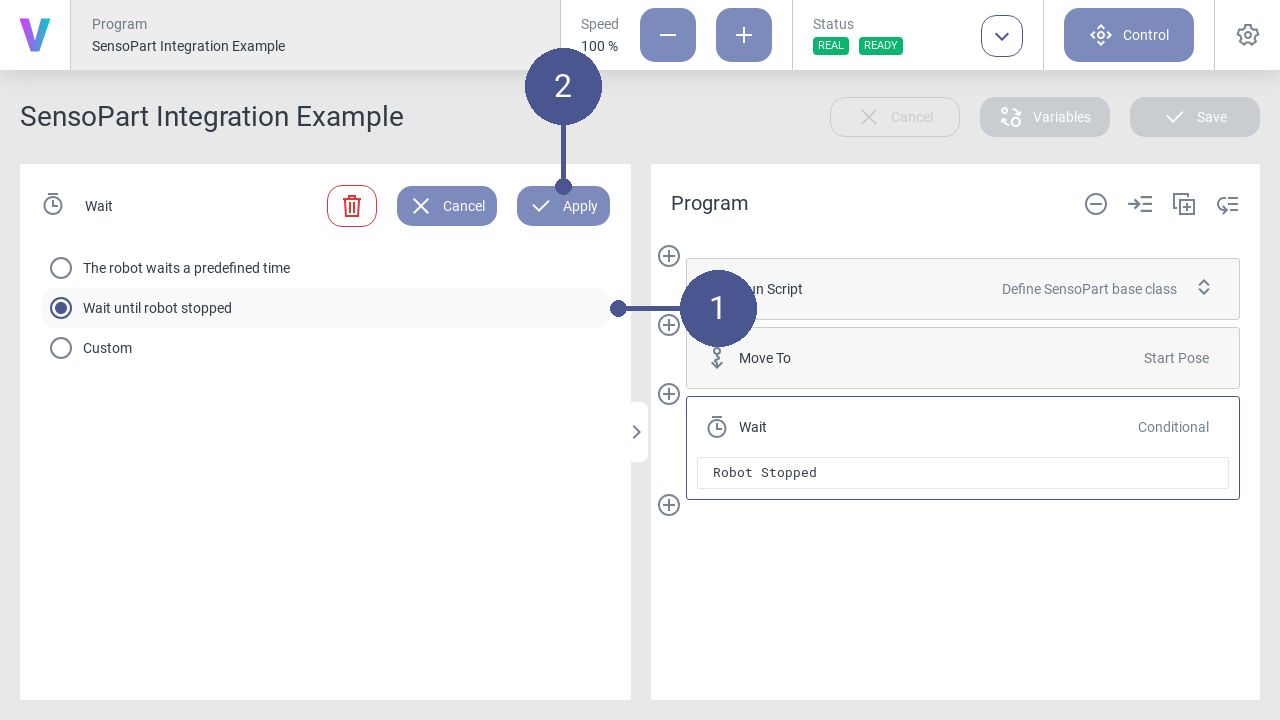

Before including another Python code block for detecting the object, add a Wait command to wait for the robot to stop moving. Otherwise, the detection might be triggered before the starting pose is reached.

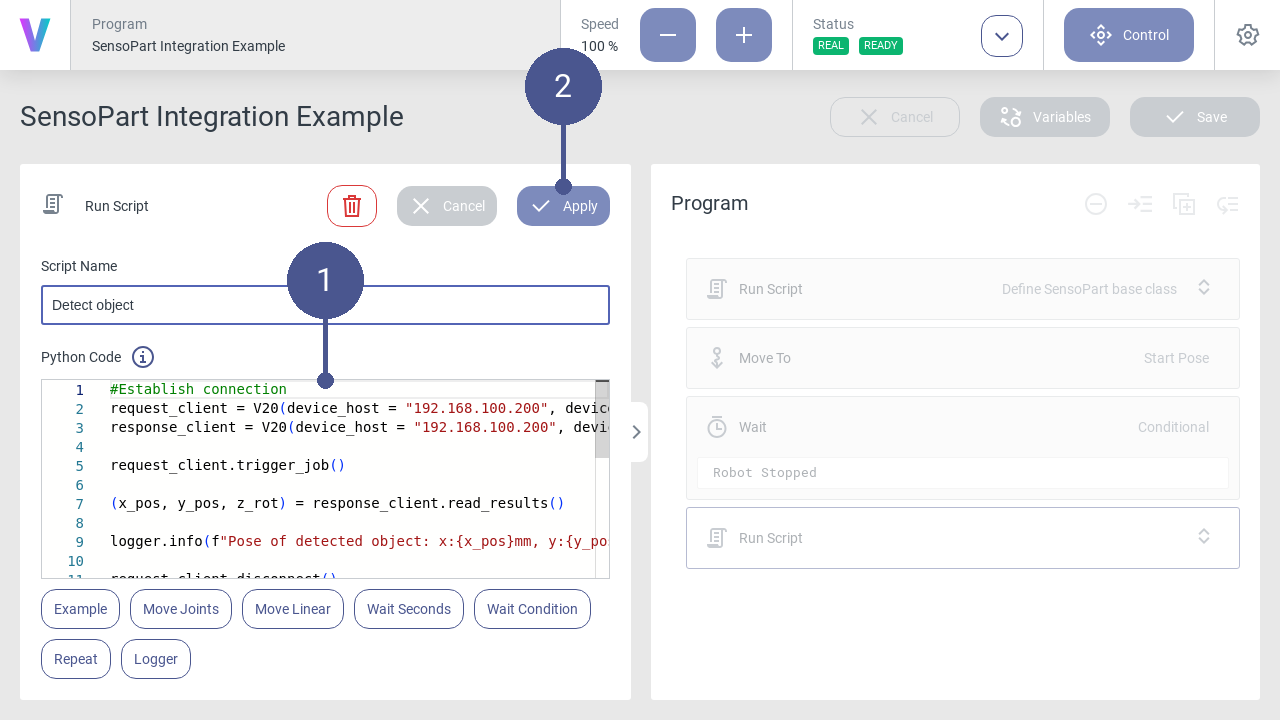

As in Fig. 29, add another Python code block in which the detection is triggered and the result is parsed (Fig. 34). The content (Fig. 34/➊) looks like the following:

 1 # Establish connection
 2 request_client = V20(device_host = "192.168.100.200", device_port = 2006)
 3 response_client = V20(device_host = "192.168.100.200", device_port = 2005)
 4
 5 request_client.trigger_job()
 6
 7 (x_pos, y_pos, z_rot) = response_client.read_results()
 8
 9 logger.info(f"Pose of detected object: x:{x_pos}mm, y:{y_pos}mm, z:{z_rot}°")
10
11 request_client.disconnect()
12 response_client.disconnect()
13
14 if x_pos == 0.0:
15     return

In this code block, two instances of the base class are initialized, one for the request channel and one for the response channel (Lines 2 & 3). Afterwards, the detection is triggered from the request client (Line 5) and the detection result is read out with the response client (Line 7). The connection to both clients is closed in Lines 11 & 12. In case that no object was detected, x_pos, y_pos, and z_rot will be all zero, terminating the program. This way, the robot does not try to move to the invalid pose (Line 14).

After the command is configured, click on Apply (Fig. 34/➋).

Fig. 34 Adding a code block for detecting the object

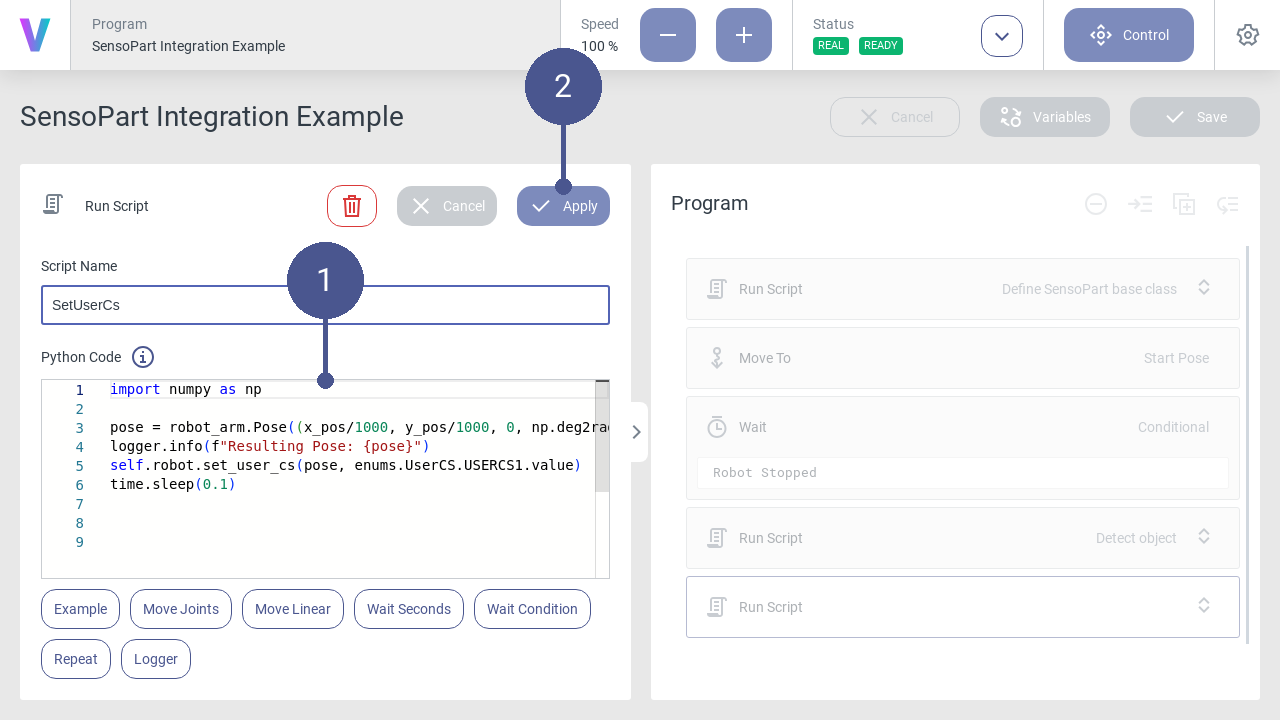

Add an additional Python code block that saves the detection result to USERCS1 (Fig. 35). The content (Fig. 35/➊) looks like the following:

1 import numpy as np
2
3 pose = robot_arm.Pose((x_pos/1000, y_pos/1000, 0, np.deg2rad(-180),0, np.deg2rad(z_rot)), enums.UserCS.ROBOTCS.value)
4 logger.info(f"Resulting Pose: {pose}")
5 self.robot.set_user_cs(pose, enums.UserCS.USERCS1.value)
6 time.sleep(0.1)

Note

The x-rotation of the USERCS1 is set to -180° (Line 3) because the tool coordinate system is flipped by 180° with respect to the robot coordinate system.

The time.sleep(0.1) at the end (Line 6) ensures that the coordinate system is saved before it is used.
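The unit handling in Line 3 can be checked outside the voraus.operator. The sketch below reproduces only the conversion of the detection result into the six pose values. It uses the standard math module instead of numpy and does not call robot_arm.Pose, which is available only inside the operator. That the pose expects meters and radians is inferred from the division by 1000 and the use of deg2rad, and the function name detection_to_pose_values is hypothetical.

```python
import math
from typing import Tuple


def detection_to_pose_values(
    x_mm: float, y_mm: float, z_rot_deg: float
) -> Tuple[float, float, float, float, float, float]:
    """Return (x, y, z, rx, ry, rz) for a detected object.

    Assumption: translations in meters, rotations in radians.
    """
    return (
        x_mm / 1000.0,           # millimeters -> meters
        y_mm / 1000.0,           # millimeters -> meters
        0.0,                     # z is kept at 0, as in Line 3
        math.radians(-180.0),    # flip about the x-axis, as in Line 3
        0.0,
        math.radians(z_rot_deg), # detected rotation about z
    )


print(detection_to_pose_values(12.5, -3.25, 90.0))
```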

Fig. 35 Adding a code block for setting a UserCS

After the command is configured, click on Apply (Fig. 35/➋).

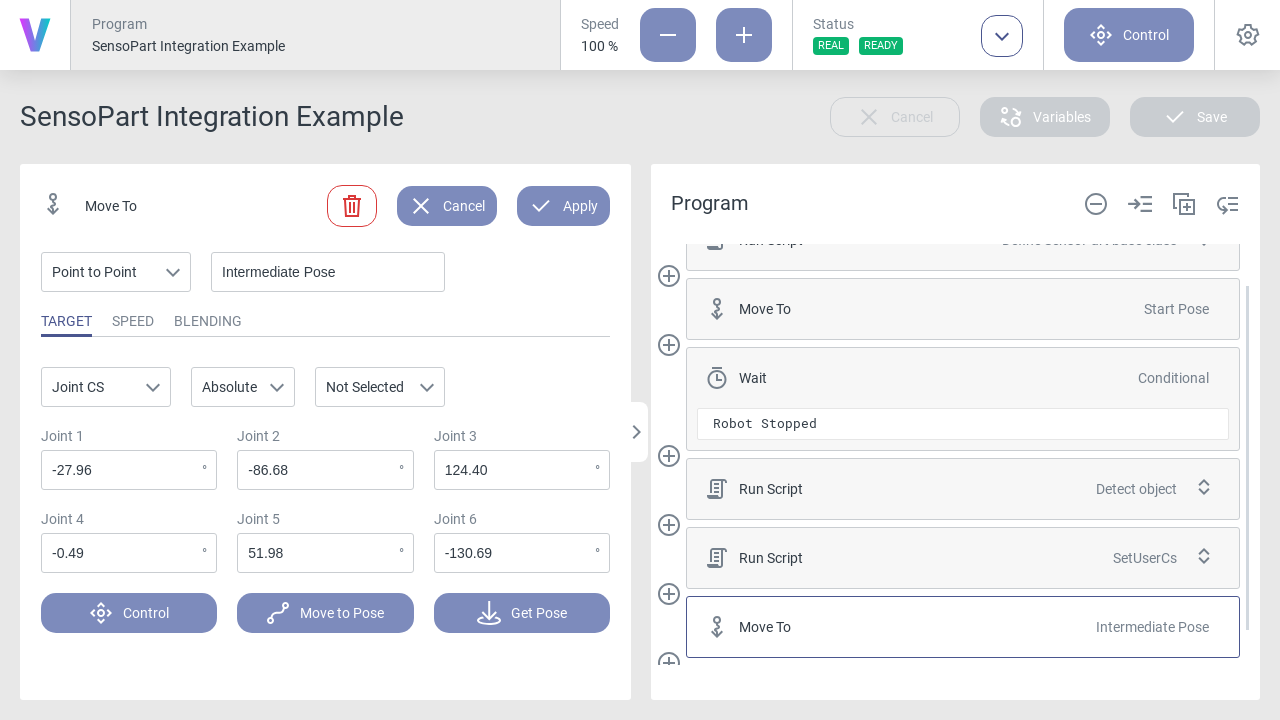

Depending on the workspace of the robot, it might be necessary to add some intermediate poses, so that the robot can move from the starting pose to the object pose without causing a collision with the environment. Add a Move To command containing the intermediate pose (Fig. 36).

Fig. 36 Adding an intermediate pose

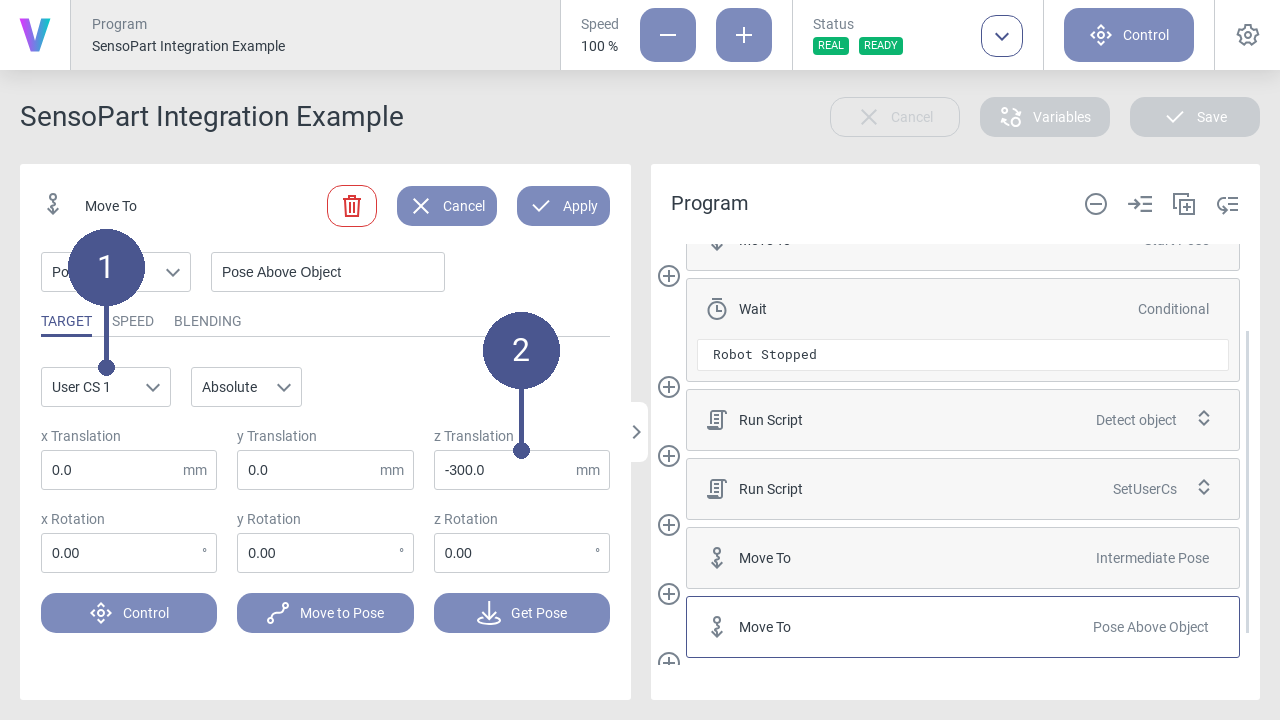

Now move to the detected object using the USERCS1 which was defined earlier. Add a Move To command and select the User CS 1 as the coordinate system (Fig. 37/➊). If you want to move to the center of the object (the detected coordinates represent the object’s center), keep the x Translation and y Translation values at 0.0. In this example, it is desired to move 300 mm above the object. Therefore, set the z Translation to -300 mm (Fig. 37/➋).

Note

Setting the z Translation to 0.0 could be used to grasp the object.

Note how negative values for the z Translation have to be used, due to the rotation of the USERCS1 by 180° around the x-axis.
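The sign convention can be verified with a small calculation. The sketch below rotates the offset (0, 0, -0.3) by -180° about the x-axis and shows that it points along the positive z-axis of the unrotated frame, that is, 300 mm above the object. It assumes that the translation of the Move To command is expressed in the axes of USERCS1. The helper rotate_about_x is written for this illustration only.

```python
import math
from typing import Tuple

Vector = Tuple[float, float, float]


def rotate_about_x(point: Vector, angle_deg: float) -> Vector:
    """Rotate a point about the x-axis by angle_deg (right-handed)."""
    angle = math.radians(angle_deg)
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    x, y, z = point
    return (x, cos_a * y - sin_a * z, sin_a * y + cos_a * z)


# z Translation of -300 mm, expressed in meters in the axes of USERCS1
offset_in_user_cs = (0.0, 0.0, -0.3)

# USERCS1 is rotated by -180 degrees about x relative to the robot CS
offset_in_robot_axes = rotate_about_x(offset_in_user_cs, -180.0)
print(f"z offset along the robot z-axis: {offset_in_robot_axes[2]:.3f} m")
```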

Fig. 37 Adding a pose above the object

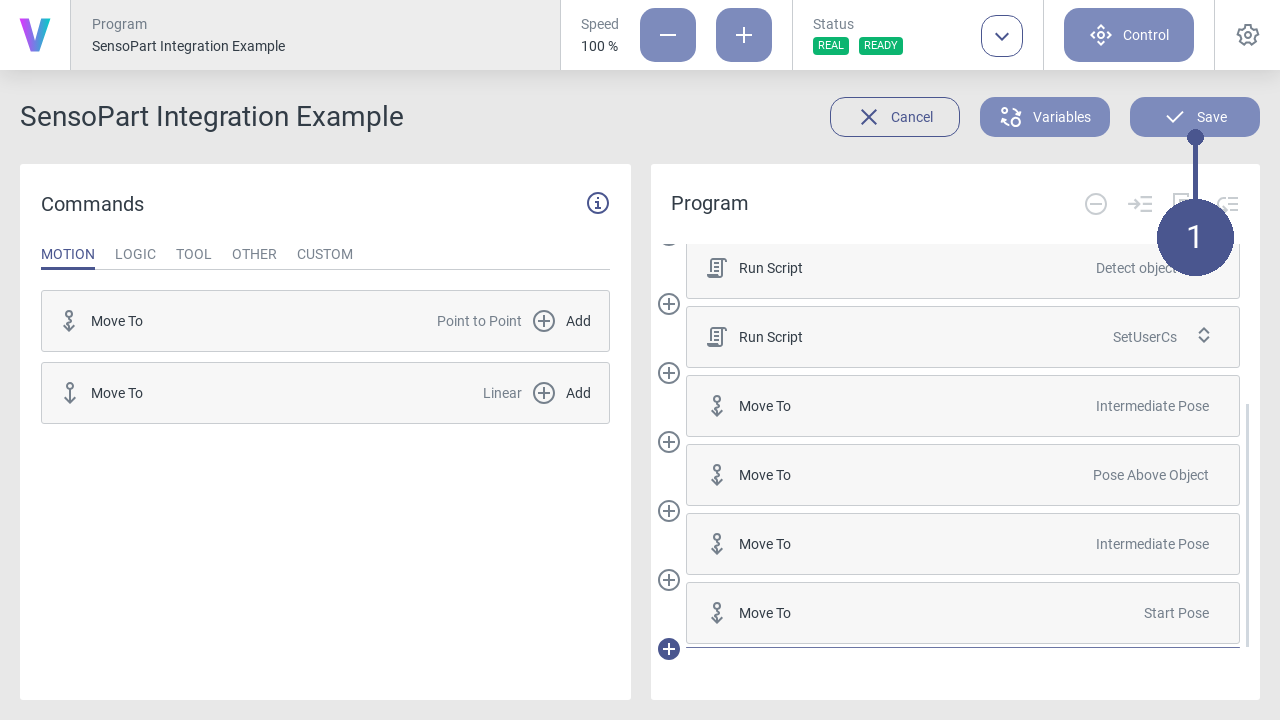

Finally, add two more Move To commands: one that moves back to the intermediate position and one that moves back to the starting position. The final program can now be saved (Fig. 38/➊).

Fig. 38 Saving the program

Troubleshooting

| Error Message | Comment |
|---|---|
| Program is idling for some time, then the program terminates with an error. | Timeout when trying to establish a connection to the camera. Make sure the network settings of the camera are correct. |
| The program terminates without an error, but the robot does not move. | No object was detected, make sure the camera’s detection job is configured correctly. |